The discourse about AI is weirdly terrible

And the AI experts seem to be the worst offenders

There is a raging debate about AI on Twitter and in the media, and it is now moving on to governments. I discussed one round of it last week when AI pioneer Geoff Hinton switched sides. My take, I guess not surprisingly, is that the debate could use some more economics. A colleague reminded me that this was basically my take during the pandemic too. On that score, one important expert, Anthony Fauci, now agrees.

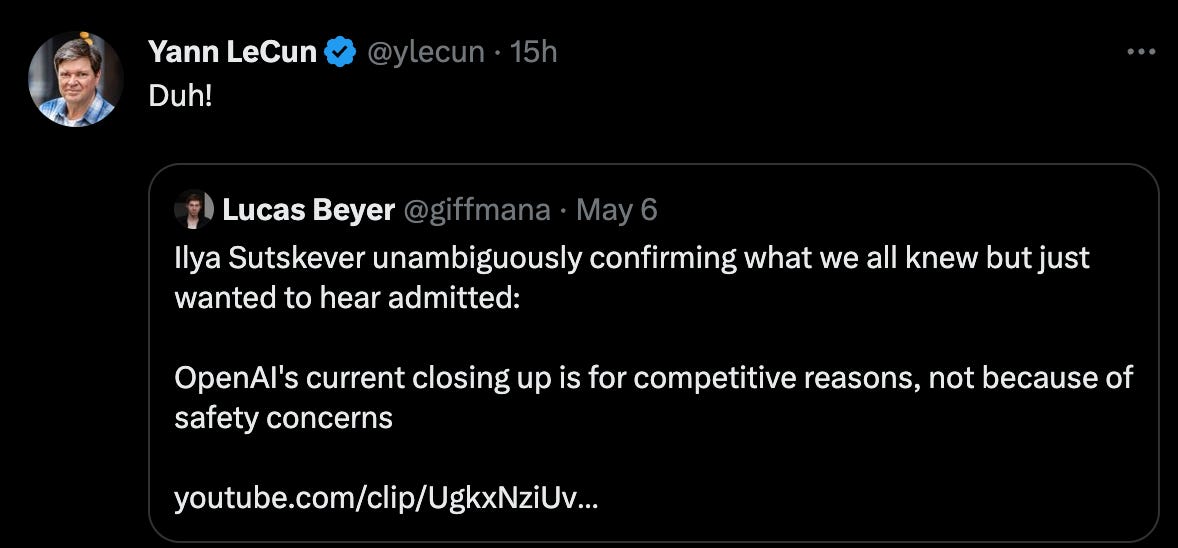

But even putting that aside, the debate between the AI experts amounts to “na” followed by “na ha.” I can no longer easily embed tweets in Substack (thanks, Elon!), so let me screenshot some examples.

These are my own selections from both sides, meant to give you a flavour of what is going on. Each of these people has, of course, made more comprehensive statements elsewhere. But lots of people weigh in.

A fair claim is that these are out of context. A fair response is that it is generous to say that there was context.

My point is that we don’t seem to be getting anywhere. The truth surely is that we don’t really know what the risks are. But one thing I have noticed about computer scientists in general is that, when it comes to these sorts of discussions, they tend to see things in black and white (like some weird caricature of binary thinking as the mark of those who work with computers).

At its heart, it is a debate about regulation

Here is what I think is going on. The discussion is about risks, but the underlying debate is really about regulation. Normally such debates ask: (a) is there a problem? (b) does this problem require a collective solution? and (c) do we have any idea whether an implementable collective solution exists? The discussion above is stuck on (a), often at the level of “I have a belief, and here are my credentials.”

When we face uncertainty, what we normally do is acknowledge it and design experiments to test it. For instance, if you are worried that LLMs might cause disinformation, you would propose some experiments or look for evidence that they have actually caused disinformation. Yes, you may end up with a disinformation problem before you intervene, but when there is uncertainty and intervention is costly, you take the hit for the sake of learning.

Ditto for the scenario where AI is going to kill us all. I don’t know how to conduct that experiment, but in other situations where we are worried about something killing us all — like viruses or nuclear bombs — we did some investigation. Now, as the climate change debate shows us, that isn’t always persuasive. But at least it gets us to a better level of discourse and understanding. I just don’t think we are going to ‘thought experiment’ our way through this one.

Then there are the rich folk

Here’s one example.

And he is joined by Elon Musk, Bill Gates, Warren Buffett and others. They are worried about AI and want regulation.

Isn’t that interesting? Some of these people tend to be on the anti-regulation side of most other debates, which is not surprising.

But raise the spectre of the invention of something that will be more powerful than them, and they are running for government legislation.

This is where an interesting piece by Ted Chiang in The New Yorker resonated with me.

> I’m not very convinced by claims that A.I. poses a danger to humanity because it might develop goals of its own and prevent us from turning it off. However, I do think that A.I. is dangerous inasmuch as it increases the power of capitalism. The doomsday scenario is not a manufacturing A.I. transforming the entire planet into paper clips, as one famous thought experiment has imagined. It’s A.I.-supercharged corporations destroying the environment and the working class in their pursuit of shareholder value. Capitalism is the machine that will do whatever it takes to prevent us from turning it off, and the most successful weapon in its arsenal has been its campaign to prevent us from considering any alternatives.

I might not have put it that way, but I agree that this is what market competition will likely achieve. What’s more, the solutions that have been proposed to let this happen without provoking, say, a revolution don’t seem to address the issue.

> Many people think that A.I. will create more unemployment, and bring up universal basic income, or U.B.I., as a solution to that problem. In general, I like the idea of universal basic income; however, over time, I’ve become skeptical about the way that people who work in A.I. suggest U.B.I. as a response to A.I.-driven unemployment. It would be different if we already had universal basic income, but we don’t, so expressing support for it seems like a way for the people developing A.I. to pass the buck to the government. In effect, they are intensifying the problems that capitalism creates with the expectation that, when those problems become bad enough, the government will have no choice but to step in. As a strategy for making the world a better place, this seems dubious.

The point here is that we have people who sit atop the power hierarchy in society, and they are, of course, against anything that restricts their use of that power. But when it comes to the threat of something that might rise above them in that hierarchy, they are all for dealing with it.

For everyone else, is it reasonable to assume that an AI that takes over at the top (if that were even possible) would necessarily leave us any less powerful than we already are? It’s not obvious, and that makes me more cautious about cutting off that option.