If there is one place where ChatGPT and its ilk are going to be very disruptive, it is in written assessment. My colleague, Kevin Bryan, found out just how easy cheating might be when he gave ChatGPT an essay question from his strategy class, and it provided a more-than-passable answer.

Here he is discussing that incident:

Suffice it to say, this type of thing is the “mess” in the title of this newsletter series, “Mess and Magic.”

The Immediate Effect

ChatGPT is in the hands of students right now. Given its power, we have to expect that students have used it for written assessment. How are we going to react?

A first instinct might be to outlaw its use. That’s all well and good, but ChatGPT isn’t plagiarising something else. It is creating written material that didn’t previously exist, which makes it hard to detect. Some services (like GPTZero) let you check whether AI wrote something. But those services are hard to use at scale and are imperfect (you can ask ChatGPT to write something that one of those services would not catch).

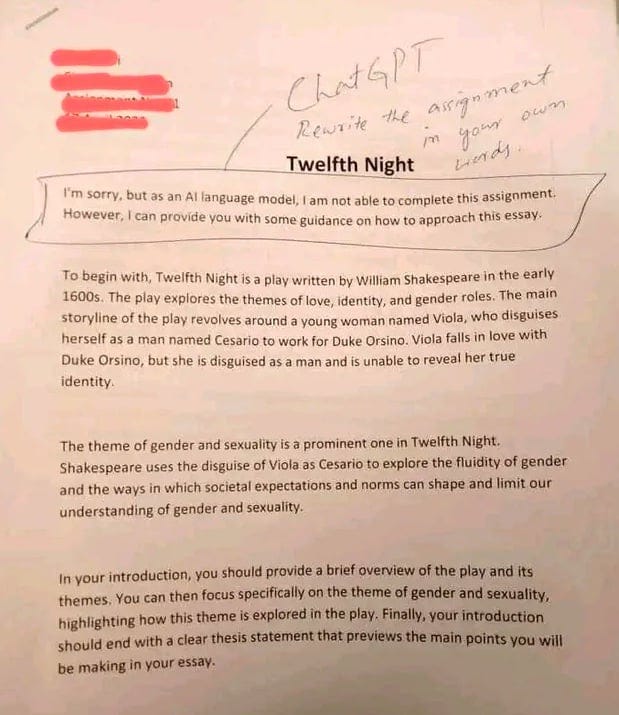

That said, sometimes students will make detection easy …

We have been down this road before. When calculators appeared, their use was initially banned. When spell checkers appeared, teachers forced students to handwrite rather than type their assignments. (This one happened to me as a student!) These restrictions didn’t last. Moreover, we never applied them to spreadsheets or to assistants like Grammarly. The philosophy became: if students will have these tools in the future, then we should teach them to use those tools, and assess them on that use, rather than tie their hands.

This suggests that perhaps we should embrace ChatGPT and other services and allow, nay require, students to use them rather than the opposite.

Learning with ChatGPT

It is easy to say, “let the students use ChatGPT,” but that does raise a question: when students produce an essay, what does it tell us about what they have learned in the course? If they can just paste in the assignment and get an answer, isn’t that something someone who had paid no attention in class could do?

Teachers aren’t the only ones asking these questions. Journalists are wondering what their value is now that ChatGPT can write. Wasn’t writing their whole job?

The answer to these questions is that if ChatGPT can produce something without any knowledge of the course, then asking for that as an assessment is a bad way of assessing. And it is not just bad now. It was likely a bad way of assessing before ChatGPT. You were assessing students on stuff that could be written by a machine! That surely rewards regurgitating material rather than reasoning about and understanding it. It surely placed high weight on decent prose and good grammar rather than on knowledge of the course material itself. In other words, what ChatGPT exposes for many courses, including perhaps Kevin’s strategy course, is that the way we assessed previously was inadequate for the task.

ChatGPT is a tool. It can answer certain questions without any knowledge of the course material. But there are ways to assess that require students to do more. One way, common in MBA courses, is to require students to apply concepts to things happening at the moment rather than just asking about something general or well-known. In my course, students are assessed on their analysis of companies that are only one or two years old. That means publicly available information is sparse, and students can’t write their assessments without doing more analysis themselves. I was fortunate in that I taught an advanced course, which makes this easier. But I suspect there are ways teachers can change things up so that students have to move beyond ChatGPT.

But we do want them to use ChatGPT. Indeed, I don’t mind if students use ChatGPT to write every word of an assignment. What I want is for students to use ‘prompts’ thoughtfully and then to thoughtfully evaluate and ‘sign off’ on what their interactions with ChatGPT produce. How precisely this is done is something we are all still learning.

Some professors have actually asked students to forget the essay entirely and just supply the prompts they would use! Here is Ethan Mollick, whose newsletter you should subscribe to if you are interested in these things:

This is an interesting approach, but what students need to be responsible for is the output from ChatGPT, so I worry that assessing the prompts may lead students not to think about whether the output was really what they intended.

Summary

Ultimately, ChatGPT doesn’t change the notion that writing should be graded. If anything, it makes written assessment more useful for revealing what a student knows that requires reasoning and understanding, rather than just memorisation or regurgitation. And it is potentially fairer in that, for most courses, it no longer grades students on their knowledge of grammar or their ability to write clearly. Not that those things aren’t worthwhile; they are. It is just that an AI tool can now provide them, so they are no longer something that we teachers or future employers will need to distinguish students from one another.