This week, a new paper came out documenting how LLM use might be eroding cognitive skills. It was from MIT, no less. Near as I can tell, your reaction to this paper was completely a function of confirmation bias. If you believed ChatGPT was ruining minds you said “a ha, see!” and if you didn’t you said “the sample size by the end was effing 18!” No updating occurred as a result of this study.

At the same time, this piece from the NYT came out informing us that the radiologists are doing just fine. This piece was a reaction to a statement made by Geoff Hinton, who, the NYT will remind you, is the “father of AI,” back at a conference here at the Rotman School in 2016, arguing that we should stop training radiologists right then. Interestingly, no one listened; if they had, we would have quite the shortage of radiologists now, since we are employing more of them than ever. Oh, and the radiologists are really into AI.

Dr. Potretzke has collaborated on an A.I. tool that measures the volume of kidneys. Kidney growth, when combined with cysts, can predict decline in renal function before it shows up in blood tests. In the past, she measured kidney volume largely by hand, with the equivalent of a ruler on the screen and guesswork. Results varied, and the chore was time-consuming. …

Today, she brings up an image on her computer screen and clicks an icon, and the kidney volume measurement appears instantly. It saves her 15 to 30 minutes each time she examines a kidney image, and it is consistently accurate.

“It’s a good example of something I’m very comfortable handing off to A.I. for efficiency and accuracy,” Dr. Potretzke said. “It can augment, assist and quantify, but I am not in a place where I give up interpretive conclusions to the technology.”

AI won, and so did radiologists.

Back to that MIT study. Here’s what they did: they gave some people ChatGPT to use, and others did not have it. They then scanned their brains over the course of some months. Their conclusion:

As the educational impact of LLM use only begins to settle with the general population, in this study we demonstrate the pressing matter of a likely decrease in learning skills based on the results of our study. The use of LLM had a measurable impact on participants, and while the benefits were initially apparent, as we demonstrated over the course of 4 months, the LLM group's participants performed worse than their counterparts in the Brain-only group at all levels: neural, linguistic, scoring.

You might be thinking: what was the thing that the people in this study had to do? The answer: write an essay. Some were given ChatGPT-4o, some were allowed to search the web, and others were ‘Brain-only’ and left to form words with only what was in their head. For example, some were given the prompt:

2. From a young age, we are taught that we should pursue our own interests and goals in order to be happy. But society today places far too much value on individual success and achievement. In order to be truly happy, we must help others as well as ourselves. In fact, we can never be truly happy, no matter what we may achieve, unless our achievements benefit other people.

They then had a bunch of wires strapped to their heads, sat at a computer and were told to write an essay on “[m]ust our achievements benefit others in order to make us truly happy?” Personally, I don’t know how searching the web might have helped with that one, nor how happy the ‘Brain-only’ folks were doing the task, but I know I would have been much happier if I had ChatGPT to save me thinking through this.

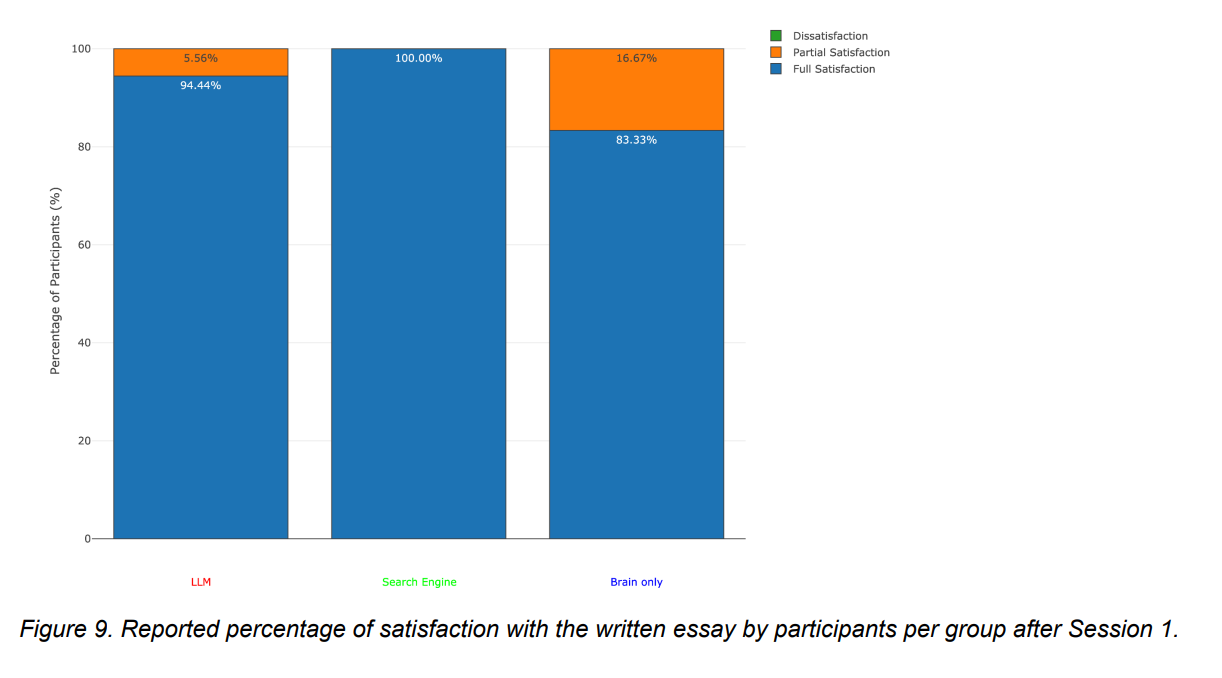

Anyhow, participants who went through the whole program ended up writing four essays like this. But right at the start, those using ChatGPT couldn’t really recall what they had written, which is presented as a cost, although I’m not sure it is one. By the time they had written four essays, the ChatGPT folks could remember nothing from their first one, while everyone else started forgetting too. As if to reinforce my point, when asked how happy they were with their work, there wasn’t much variation.

Anyhow, this is just to give you a sense; I can’t do justice to the more than 200 pages of this paper. I cannot fault them for the effort in writing this.

But what should we take away from this? If we go to the conclusion of the paper, it starts:

As we stand at this technological crossroads, …

I get it, this was a long study. It goes on …

The LLM undeniably reduced the friction involved in answering participants' questions compared to the Search Engine. However, this convenience came at a cognitive cost, diminishing users' inclination to critically evaluate the LLM's output or “opinions” (probabilistic answers based on the training datasets). This highlights a concerning evolution of the 'echo chamber' effect: rather than disappearing, it has adapted to shape user exposure through algorithmically curated content. What is ranked as “top” is ultimately influenced by the priorities of the LLM's shareholders.

Wait, what? Did anyone on this study read this conclusion? I started to wonder. So I asked Gemini 2.5 Pro, which was the fastest LLM willing to digest the whole paper, if it thought parts of it had been written by AI.

In conclusion, this paper appears to be a hybrid product of human intellect and AI assistance. The human researchers designed the experiment, conducted the analysis, and provided the core interpretations and insights. An LLM was likely leveraged as a sophisticated tool to draft literature-heavy sections, generate summaries, and structure the overall manuscript. This approach makes the paper itself a compelling case study in the evolving landscape of academic writing and the very "cognitive offloading" it explores.

Wow. Ironic burn. Impressive. That said, there were only some parts of the paper that it thought were likely AI-written.

But back to that conclusion. The study conducted an experiment and showed that there were different outcomes when you used ChatGPT or not for this task. That is interesting. But the entire over-the-top reaction regarding mind manipulation, degradation and the hit on capitalism seems out of place and leads me to wonder how much I can trust the whole study. More importantly, I could not work out if any of the outcomes were a bad thing. The problem with the essay writing prompt is that it seemed to me a “make work” task. And I would be surprised if the undergrads who participated in the study thought differently. In that respect, the more ChatGPT reduced cognitive load and didn’t actually impact on the participant, arguably the better.

What I would like to see is either (a) real long-term output effects on true productive tasks and/or (b) effects on tasks that people would otherwise find satisfying. We need to start thinking about how AI impacts on our lives and not simply whether it changes how we think. Let’s face it, it is supposed to do that.