It has been a little while since I wrote a newsletter. The reason is simple: the other main thing I do, research, has had a major productivity shock with the arrival of OpenAI's o1 and o1-pro. AI has not touched this newsletter (it is pretty much the only AI-free thing I do at work these days, save a grammar checker), so the opportunity cost of writing it has gone way up.

But I wanted to pop back into your inbox after reading this New York Times article. It concerns the views of Sebastian Siemiatkowski, the chief executive of Klarna, a company that allows consumers to defer payments on goods. Anyhow, he is big on AI:

Over the past year, Klarna and Mr. Siemiatkowski have repeatedly talked up the amount of work they have automated using generative A.I., which serves up text, images and videos that look like they were created by people. “I am of the opinion that A.I. can already do all of the jobs that we, as humans, do,” he told Bloomberg News, a view that goes far beyond what most experts claim.

The article goes on:

According to Klarna, the company has saved the equivalent of $10 million annually using A.I. for its marketing needs, partly by reducing its reliance on human artists to generate images for advertising. The company said that using A.I. tools had cut back on the time that its in-house lawyers spend generating standard contracts — to about 10 minutes from an hour — and that its communications staff uses the technology to classify press coverage as positive or negative. Klarna has said that the company’s chatbot does the work of 700 customer service agents and that the bot resolves cases an average of nine minutes faster than humans (under two minutes versus 11).

Mr. Siemiatkowski and his team went so far as to rig up an A.I. version of him to announce the company’s third-quarter results last year — to show that even the C.E.O.’s job isn’t safe from automation.

Suffice it to say, you have to be pretty confident about this stuff to say all of this while you still have actual employees. Klarna has 4,000 of them, down from 5,000, with a target of 2,000. And he really doesn't think there will be anything left for them to do:

In interviews, Mr. Siemiatkowski has made clear he doesn’t believe the technology will simply free up workers to focus on more interesting tasks. “People say, ‘Oh, don’t worry, there’s going to be new jobs,’” he said on a podcast last summer, before citing the thousands of professional translators whom A.I. is rapidly making superfluous. “I don’t think it’s easy to say to a 55-year-old translator, ‘Don’t worry, you’re going to become a YouTube influencer.’”

If this isn’t a “capital is going to win” scenario, I don’t know what is.

In case you are wondering, this view doesn’t come out of the blue. If you read the article, you will find that Siemiatkowski has had some labour relations troubles recently.

But here’s the thing: Siemiatkowski bases his plans on improvements in generative AI. So this isn’t a conventional software play of automating routine tasks. Instead, it rests on a belief that AI agents built on generative AI will be flexible and reliable enough to replace Klarna’s people. I think this is a pipe dream precisely because generative AI is so uniquely human.

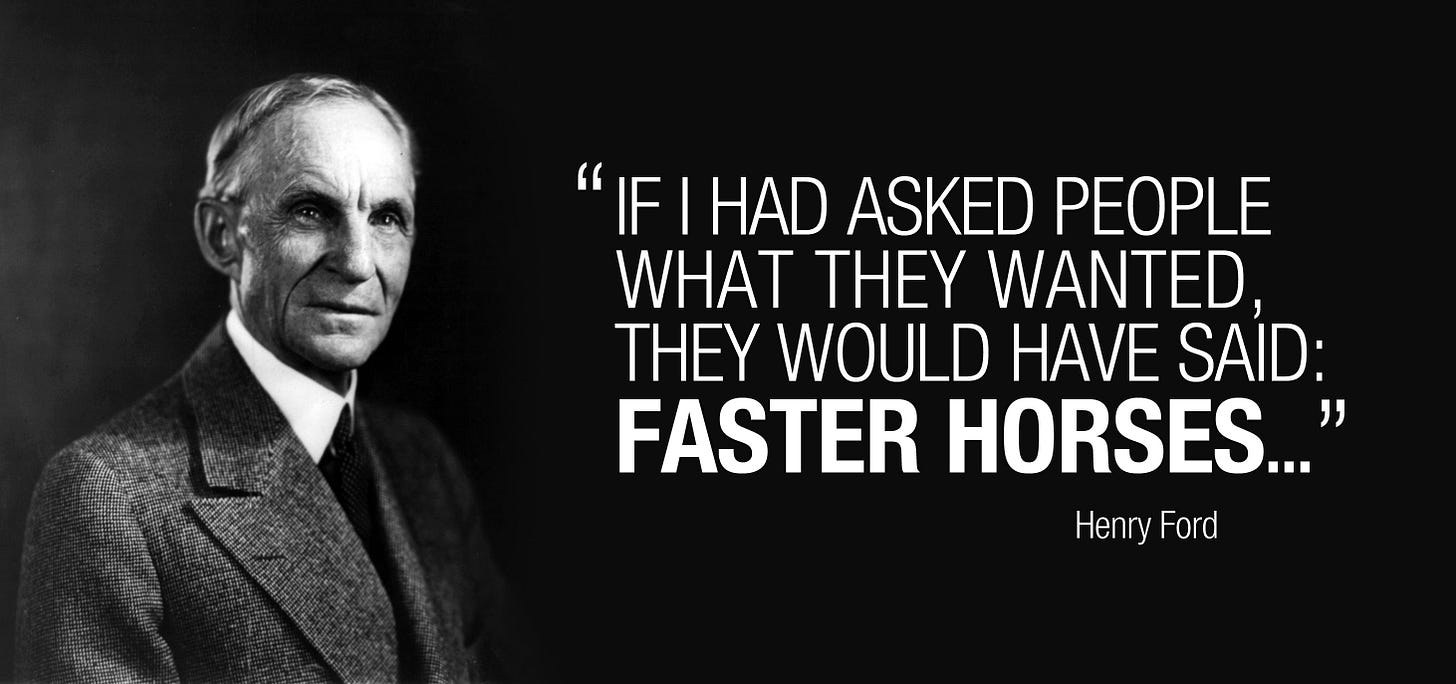

Let me explain, as that statement sounds paradoxical. Back when the car was in its infancy, Henry Ford famously claimed that if he had asked his customers what they wanted, they would have said, “a faster horse.” A car wasn’t that. It wasn’t faster, for starters. It was something different.

When asked what he wants from AI, Siemiatkowski, like so many other CEOs who struggle with managing people, is effectively saying, “a faster and cheaper human.” And generative AI is delivering just that. Generative AI is trained on human stuff, so it does human stuff. It is just cheaper and faster.

But that also means that it is nothing without guidance and input, just as people are nothing without them. The vast majority of people work for others in one way or another. They know what to do because they are told, by others or, in some cases, by the market. This is because work is a traded activity, and there are few people who do no work. That means that AI needs to be managed by people. Thus, generative AI agents perform best when working with people, just as people perform best when working with people who are not like them. The idea that you can run entire ventures sans human employees, replacing them all with generative AI agents, is a pipe dream. Generative AI agents need monitoring, they need guidance, they need management. And the more of them there are, the more management they are going to need.

Some AI can be developed that is something other than a faster human. For instance, Eric Topol today wrote about radiology (again) and how AI that does pure prediction (i.e., prediction that is not based on imitating humans but straight-out prediction) can actually perform better when humans are taken out of the loop. These AIs are not just faster humans; they are developed differently, and so, for these particular tasks, they can be made to work better without people, at least people as they are currently trained. This is because the people are either not needed or do not yet know how to manage such AI.

The upshot is that we want faster humans, but they will only be useful if we manage them.